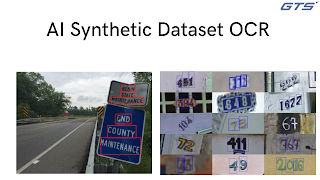

AI Synthetic Datasets and OCR

Optical character recognition (OCR) is a widely used business technology that saves time, money, and other resources by automating data extraction and storage. It is also known as text recognition. OCR software extracts and reuses information from scanned paper documents, photographs taken by cameras, and image-only PDF files. It picks out letters from an image, then assembles them into words and sentences, so the original content can be accessed and edited. It can also eliminate the need for manual data entry.
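As a rough illustration of the "letters, then words, then sentences" step, the sketch below groups already-recognized characters into words by looking at the horizontal gaps between them. The `CharBox` type, the pixel coordinates, and the `gap_threshold` value are assumptions made for this example; real OCR engines use more elaborate layout analysis.

```python
from dataclasses import dataclass

@dataclass
class CharBox:
    char: str     # recognized character
    x: float      # left edge of the character box, in pixels
    width: float  # width of the character box, in pixels

def group_into_words(boxes, gap_threshold=5.0):
    """Join characters into words, starting a new word wherever the
    horizontal gap between neighbouring boxes exceeds gap_threshold."""
    words, current = [], ""
    prev_right = None
    for box in sorted(boxes, key=lambda b: b.x):
        if prev_right is not None and box.x - prev_right > gap_threshold:
            words.append(current)
            current = ""
        current += box.char
        prev_right = box.x + box.width
    if current:
        words.append(current)
    return words

# Hypothetical recognition result for a single line of text
line = [CharBox("O", 0, 8), CharBox("C", 9, 8), CharBox("R", 18, 8),
        CharBox("i", 40, 3), CharBox("s", 44, 6)]
sentence = " ".join(group_into_words(line))
print(sentence)  # OCR is
```

The threshold is the main design choice here: too small and words split apart, too large and neighbouring words merge.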

Synthetic data is created artificially instead of being gathered from the real world, and it grew out of the dataset needs of machine learning. Originally, training data had to be collected to cover every scenario in order to ensure the accuracy of an AI model. If a specific scenario never occurred or was never recorded, there was no data for it, leaving a large gap in the machine's ability to recognize the situation. Synthetic data lets computer programs generate data to fill those gaps in usage scenarios. Producing a wider range of data gives teams more flexibility to train more diverse models for products and services across many industries.

Although the idea of synthetic data may seem recent, it has been in use for a long time. The concept is generally traced to Donald Rubin's 1993 article "Discussion: Statistical Disclosure Limitation," published in the Journal of Official Statistics. The article focused on data privacy. It proposed releasing not real microdata but only synthetic microdata, constructed with multiple imputation so that they could be analyzed with standard statistical software. The result was a dataset that contained no actual data, which remains a significant advantage of synthetic data today. Demand for synthetic data now comes from a variety of industries and is driven (pun intended) in particular by the autonomous vehicle sector.
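To make the idea of program-generated data concrete for OCR, here is a minimal sketch that produces pairs of noisy text and clean ground truth. Everything in it is an assumption for illustration: the invoice-style template, the `CONFUSIONS` table of look-alike characters, and the `noise_rate` value do not come from any particular tool or dataset.

```python
import random

# Hypothetical look-alike substitutions that a scan might introduce
CONFUSIONS = {"o": "0", "l": "1", "I": "l"}

def make_sample(rng, noise_rate=0.3):
    """Return (noisy_text, clean_text) for one synthetic invoice line."""
    clean = (f"Invoice {rng.randint(1000, 9999)} "
             f"Total {rng.randint(1, 999)}.{rng.randint(0, 99):02d}")
    noisy = "".join(
        CONFUSIONS[c] if c in CONFUSIONS and rng.random() < noise_rate else c
        for c in clean
    )
    return noisy, clean

def make_dataset(n, seed=0):
    """Generate n labelled samples; the seed makes the run repeatable."""
    rng = random.Random(seed)
    return [make_sample(rng) for _ in range(n)]

dataset = make_dataset(5)
for noisy, clean in dataset:
    print(noisy, "<-", clean)
```

Because the program writes the clean text itself, every sample comes with an exact label at no annotation cost, and rare cases can be produced on demand by changing the template or the noise rate.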

OCR systems turn printed, physical documents into machine-readable text using a combination of hardware and software. Hardware, such as an optical scanner or a specialized circuit board, reads or copies the text. Software then performs the more advanced processing.

How Does Synthetic Data Help AI?

As demand for ML datasets has grown, so has the need for synthetic data, which can help businesses obtain reliable training data to improve their products and services. Real-world data is based on events that have already occurred and may contain personally identifiable information (PII). It is not difficult to remove PII from data before using it for training, but it is much harder to stage specific real-world situations for a model to train on. These situations, often called edge cases, are what distinguish synthetic data from human-collected data.
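As a small illustration of removing PII before training, the sketch below redacts email addresses and phone numbers with regular expressions. The two patterns and the placeholder tokens are simplifying assumptions; they will miss many formats, and other kinds of PII such as names need more than pattern matching.

```python
import re

# Simplified, assumed patterns; real PII detection needs broader coverage
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact_pii(text):
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

sample = "Contact Jane at jane.doe@example.com or +1 (555) 010-9999."
print(redact_pii(sample))  # Contact Jane at [EMAIL] or [PHONE].
```

Note that the name "Jane" survives the redaction, which shows why pattern-based scrubbing alone is only a first step.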

How Can Synthetic Data Help You?

The primary advantages of using synthetic data are:

- Reduced cost

- Faster data collection

- Data that is free of PII

- Inclusive datasets

- Access to data for rare events (edge cases)

- Advanced and precise annotations

All of these are excellent reasons to use synthetic data. However, it is important to remember that humans still play an important role in providing data across the AI lifecycle. Real-world data is used together with synthetic data to verify that the model works as intended. Real-world data may also contain outliers that synthetic data cannot naturally reflect. You can program synthetic data to account for particular situations or edge cases, but it will not contain those natural outliers.

The synthetic data market will always need human-created data in order to succeed. Human-generated data is the basis for the software that produces synthetic data. Because this human data seeds the generation process, it must be of high quality so that the generated data is of the same quality. After the data is produced, quality control checks it for errors by validating it against the highest-quality human-annotated data.

This partnership has two benefits beyond increasing your sample size: lower cost and less time. Because a large portion of the data is created by computers, it costs less, which frees up budget for further research. The time savings come from the fact that the human-annotated portion of the data is smaller and can be completed quickly.

Datasets can also be more inclusive. Synthetic data can be generated from a neutral, consistent starting point, which helps keep it free from bias and other influences and lets it include a suitable range of perspectives.
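One common way to check an OCR model against human-annotated data is the character error rate (CER): the edit distance between the model's output and the human labels, divided by the number of characters in the labels. The sketch below is a minimal version of that check; the sample strings are invented for illustration, and the article does not say which metric any particular vendor uses.

```python
def edit_distance(a, b):
    """Levenshtein distance between strings a and b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute
        prev = curr
    return prev[-1]

def character_error_rate(predictions, references):
    """Total edit distance divided by total reference length."""
    errors = sum(edit_distance(p, r) for p, r in zip(predictions, references))
    total = sum(len(r) for r in references)
    return errors / total if total else 0.0

# Hypothetical human labels and outputs of a model trained on synthetic data
human_labels = ["Total 42.00", "Invoice 1001"]
model_output = ["Tota1 42.00", "Invoice 1001"]
cer = character_error_rate(model_output, human_labels)
print(f"CER: {cer:.3f}")
```

Here one wrong character out of 23 gives a CER of about 0.043. Tracking this number on a real, human-annotated test set shows whether training on synthetic data carries over to real documents.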

What is the background of Optical Character Recognition?

Ray Kurzweil founded Kurzweil Computer Products, Inc. in 1974 with the aim of creating an omni-font optical character recognition (OCR) system that could recognize text printed in almost any font. He decided that the best use for this technology was a reading machine for blind people, so he built a device that read text aloud using text-to-speech. Kurzweil sold his business to Xerox in 1980. Xerox was interested in commercializing paper-to-computer text conversion.

OCR and GTS

Global Technology Solutions (GTS) is a technology leader that is always working on new and improved software for personal and commercial use. Over the years we have advanced our optical character recognition capabilities and combined them with artificial intelligence (AI). We provide image data collection, text dataset collection, and speech and video data collection.
